Wow, that was painful. So much more painful than it needed to be.

My home lab environment is made up of four servers:

- A Dell PE1950 II with 4x1T drives — this runs the VMs for my home network.

- A Dell PE1950 III with no drives — this will run the VMs I need for testing work-related things, mostly.

- A Dell R410 with a bad BMC, currently unused.

- A SuperMicro server with a slowly-failing backplane. I was using this for work-related things, but because of the backplane, the RAID array is degraded and it beeps continuously while powered on. I try to leave it off, for the sanity of my family, and in turn, me.

I had the bright idea to upgrade the RAM in the two PE1950s. One had 16G, the other had 4G, and both supported up to 64G. I bought the memory kits, and waited.

Yesterday they arrived, and I set about installing the memory. I powered on the 1950 II, and…nothing happened. I checked the video cable, I plugged into the front, the back, I bypassed the KVM and plugged in direct. No joy.

I powered on the 1950 III, and it showed the full 64G. Something is wrong with the II. Let’s troubleshoot.

I put the old RAM back in the 1950 II, and it started. I immediately noted that the BIOS version was different, and I recalled the notes about max-memory for the 1950 II being conditional on a minimum BIOS version. Clearly, it needs to be upgraded. And that’s where the problems really started.

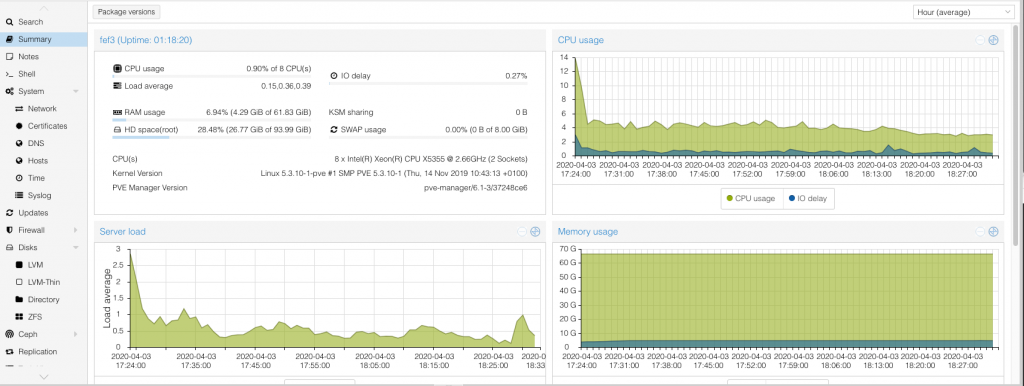

On my hardware, I mostly run Proxmox. If you haven’t heard of it, Proxmox is a virtualization system which is built on a Debian OS, and pulls together KVM virtual machines and OpenVZ containers in a single platform.

Dell issues their BIOS updates mostly in one of two formats: for Linux (Red Hat-based), and for Windows. There are other variants, but those are the two big ones. Well, herein lies the problem: the Linux method relies on specific Red Hat packages being installed, and Proxmox ain’t Red Hat.

I tried using FreeDOS, but the Windows package requires a Win32 environment and wouldn’t install. I tried using CentOS 7 Live, but even after installing the packages and running the upgrade, the BIOS update failed. In the end I pulled the RAID card and drives out of the 1950 II, installed the HBA from the III and an old drive I had, and installed an evaluation copy of Windows Server 2012 for about an hour. From there I was able to download the BIOS update and the BMC update and install them successfully.

After a full day of fighting with it, I was able to put the 64G of memory back into it, swap the controller and disks back in, and watch it boot back into Proxmox. Which wouldn’t talk to the network, because for some reason the names of the NICs had changed? That was an easy fix: rename the interfaces in the /etc/network/interfaces file, down the vmbr0 interface, start it back up again, and we’re away laughing.
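For the curious, the rename itself is just a find-and-replace on the interfaces file. Here is a minimal sketch of that step in Python. To be clear, this isn't what I ran on the day (I did it by hand in an editor), and the names in it are assumptions for illustration: `eth0` and `eth2` are hypothetical old and new NIC names, and the sample file is a made-up, stripped-down bridge config rather than my real one.

```python
import re


def rename_interfaces(config_text, renames):
    """Return config_text with each old NIC name swapped for its new name.

    renames maps old name -> new name, e.g. {"eth0": "eth2"} (hypothetical).
    """
    if not renames:
        return config_text
    # Match whole interface names only, so renaming "eth1" leaves "eth10" alone.
    # A trailing ".100" (VLAN) or ":1" (alias) suffix is still allowed.
    names = "|".join(re.escape(old) for old in renames)
    pattern = re.compile(r"(?<![\w.:-])(" + names + r")(?![\w-])")
    # One pass over the text, so swapping two names does not rename twice.
    return pattern.sub(lambda m: renames[m.group(1)], config_text)


# Made-up example of a Proxmox-style /etc/network/interfaces file.
SAMPLE = """\
auto lo
iface lo inet loopback

iface eth0 inet manual

auto vmbr0
iface vmbr0 inet static
    address 192.168.1.10
    netmask 255.255.255.0
    gateway 192.168.1.1
    bridge_ports eth0
    bridge_stp off
    bridge_fd 0
"""

if __name__ == "__main__":
    # Assumption: the NIC formerly called eth0 now shows up as eth2.
    print(rename_interfaces(SAMPLE, {"eth0": "eth2"}))
```

The sketch only prints the rewritten text; it never touches the real file. If you adapt it, keep a backup of /etc/network/interfaces first, and remember that the bridge still has to be bounced afterwards (on a Debian-style system that is typically `ifdown vmbr0` followed by `ifup vmbr0`).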

So the end result is simple: even if you’re a die-hard Linux user (and I certainly don’t claim to be one), sometimes the answer is to just install Windows.

Now I’m just waiting for the RAID array to rebuild — because one of the drives died while I was in the middle of all this. Fortunately I had a spare, and replacement parts for the spares drawer have been ordered.